Yi-Lun Wu (Velen)

Summary

- 7+ years of experience in big data, both in the cloud (GCP, AWS) and on-premises (Cloudera CDH).

- Develop a data catalog in a hybrid cloud environment (on-prem + AWS) for global commerce at Yahoo.

- Led a machine learning team to build offline/real-time platforms for recommendation systems from scratch at Garena.

- Led and architected large-scale data pipelines/warehouses at Innova Solutions (AWS) and 17LIVE (GCP), cooperating with the data science, machine learning, and TW HQ data teams.

- Expert in the Hadoop ecosystem (HDFS, Hive, HBase, Spark), with experience from Athemaster and Xuenn.

Sr. Big Data Engineer / Team Lead

Taoyuan, TW

[email protected]

Skills

Languages

- Python

- SQL

- Linux Shell Script

- Scala

Big Data Solutions

- Cloudera CDH

- Hadoop ecosystem

- AWS EMR

- GCP Dataproc

Data Warehouse

- Hive

- Google BigQuery

- MySQL

- HBase

- ClickHouse

- AWS RDS

- AWS Athena

ETL Skills in Big Data

- Spark / Spark Streaming

- Hive (for ELT)

- Impala

- Kafka

- Cloud DataFlow (GCP)

Workflow Skills

- Airflow

- Digdag

- NiFi

- AWS CloudFormation

Other Skills

- Great communication

- Leadership

- Scrum

- JIRA

- Linux

- Git

Experience

Senior Engineer at Yahoo. Jan, 2023 - Present

At Yahoo, the greatest challenge is developing in response to rapid market changes: the data catalog must integrate with various complex systems while maintaining high stability and high-quality data.

More responsibilities/details are listed below.

- Fetch provider data in Java from various sources, such as client APIs, GraphQL, FTP, S3, and GCP.

- Standardize the fetched data and load it into the data warehouse in Hadoop/Hive/HBase using Spark.

- Implement a checking system in Java to guarantee high-quality data.

- Migrate part of the services from on-prem (Hadoop) to the cloud (AWS), creating a hybrid cloud environment.

Senior Data Engineer / Team Lead at BOOYAH! Live Garena. Oct, 2021 - Sep, 2022

Joined the ML team as its first data engineer. The challenges included building the data warehouse and pipelines from scratch and designing the data flow to support both batch and real-time recommendation systems.

More responsibilities/details are listed below.

- Design the data model from scratch and manage the Hadoop-based data warehouse for the training system.

- Develop a streaming ETL pipeline in Spark from a message queue (Kafka) into an in-memory data structure store (Redis) and ClickHouse for the real-time recommendation system.

- Design ELT jobs for the offline report system in Hadoop/Hive using Spark.

- Build monitoring dashboards on Grafana.

- Take leadership of the TW side.

Senior Data Engineer at 17 Media. Jun, 2020 - Oct, 2021

The main challenges for the 17 Media data team were fast-growing data volume (processing 5-10x TB level daily), complex cooperation with stakeholders, pipeline cost optimization, and refactoring high-latency systems.

More responsibilities/details are listed below.

- Manage the Google BigQuery-based data warehouse/lake.

- Refactor the data warehouse architecture for a 2x performance improvement.

- Develop batch/streaming ETL pipelines to process data from diverse data sources (e.g. MongoDB, MySQL, APIs) into GCP.

- Design workflows using Digdag.

- Implement CI/CD on BigQuery.

- Build up the visualization tool (Superset) on Kubernetes.

- Provide leadership and guidance to junior members.

Senior Software Engineer at Innova Solutions Ltd. Oct, 2018 - May, 2020

Developed the Intelligent Healthcare Data Platform (IHDP) to empower company solutions using AWS services.

More responsibilities/details are listed below.

- Build APIs to process patient records and provide access for downstream use.

- Build infrastructure on AWS in compliance with HIPAA and GDPR standards.

IT Consultant at Xuenn Pte Ltd. May, 2018 - Sep, 2018

The biggest challenge at Xuenn was the performance issues in the original data warehouse. I led a project to build a Hadoop cluster to reduce the load on the original EDW and improve various data pipelines.

More responsibilities/details are listed below.

- Adopt new technologies to embed into, integrate with, or replace existing systems, delivering leaps in performance, benefits, and capabilities for users.

- Build multiple systems and integrate them with existing ones, including Hadoop, data mining, and data warehouse systems.

- Design an architecture that processes real-time data end-to-end using various Hadoop solutions without coding.

Software Engineer at Athemaster Co., Ltd. Jan, 2016 - Apr, 2018

Athemaster is a technology company offering solutions and expertise in implementing Enterprise Data Hub and automating Data integration with Open Source technologies such as Apache Hadoop and Spark.

More responsibilities/details are listed below.

- Focus on enterprise big data solutions such as Hadoop and Spark (Cloudera CDH).

- Maintain and improve other companies' Hadoop clusters.

- Help other companies integrate with Hadoop and resolve technical issues.

- Build Python data pipelines to ETL data into Hadoop.

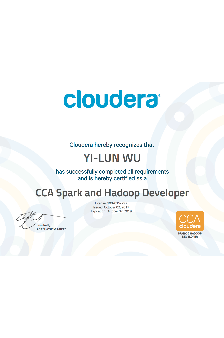

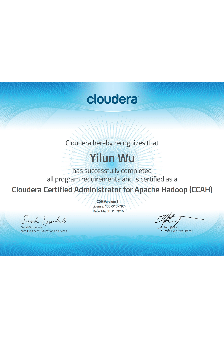

Certification and License

CCA-175: CCA Spark and Hadoop Developer

Education

Undergraduate studies at Tamkang University, Department of Management Sciences.

2008 - 2012